Generative AI & Future of AI at Work (invitation, #AIslovakIA)

This will not be a typical post about the coming ultimate battle of Microsoft vs. Google, or about the advantages and disadvantages of #ChatGPT/OpenAI vs. #GoogleBard/Google Bard AI...

In fact, the topic is and will be much broader. But first of all (also to celebrate the first 250+ of you who have subscribed to this newsletter), I have an invitation for you: join us for a conference on this topic tomorrow, 20th of February, at 2:00pm CET, ONLINE here: https://video.nti.sk/join/?hp3fr09cjhg

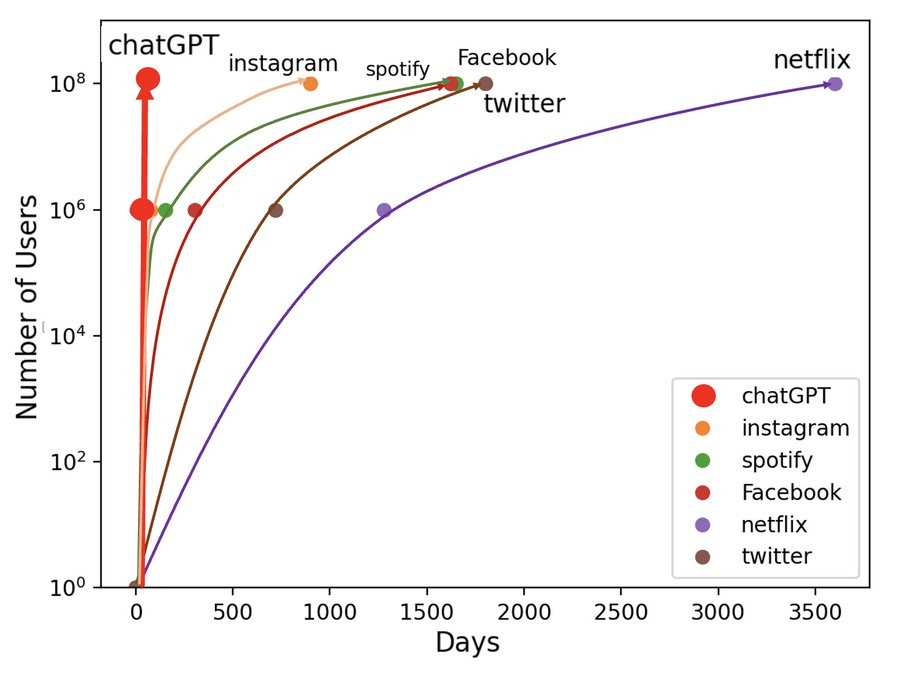

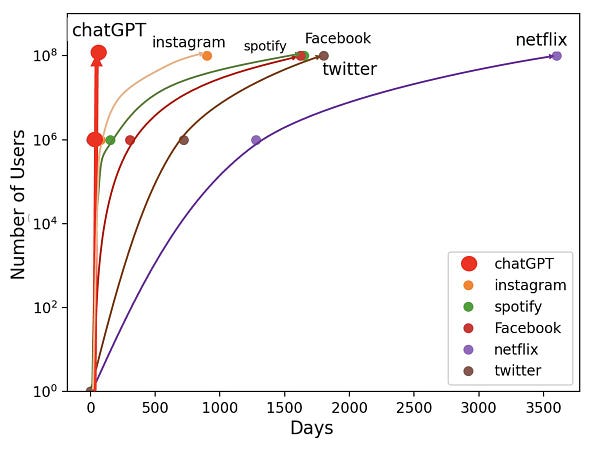

But first, why should you even care about this topic (ChatGPT & Generative AI)? Well... since you're asking, here is a figure that (IMHO) is worth a thousand words:

Figure: Number of days to reach 1M and 100M users: ChatGPT vs. Instagram, Spotify, Facebook, Netflix and Twitter

Source:

Now... why you should care about, and attend, our upcoming event on Generative AI by #AIslovakIA (I will be there as a speaker as well, for GapData Institute). AIslovakIA is the 🇸🇰 national platform for AI development in Slovakia. (By the way, a "fun fact": did you know that #Slovakia also gave the world Andrej Karpathy? He is the ex-Director of AI @ Tesla and is now returning to OpenAI, which he co-founded, to push the development of Strong AI/AGI/HLAI even further, with ChatGPT as an intermediate product on the way to the main goal: the real... Strong AI.)

Speakers:

prof. Peter Sinčák, Head of Scientific Board @ www.AISlovakia.com - National platform for AI development in Slovakia #AIslovakIA & Center for Intelligent technologies, Department of Cybernetics & AI, Faculty of Electrical Engineering & Informatics @ Technická Univerzita v Košiciach [More about Peter here: https://petersincak.com/]

doc. Martin Takáč, Associate professor @ Centre for Cognitive Science, Department of Applied Informatics, Faculty of Mathematics, Physics and Informatics, Comenius University & senior member of the Cognition and Neural Computation Research Group and a founding member of Slovak Cognitive Science Society. [More about Martin here: https://cogsci.fmph.uniba.sk/~takac/]

Jozef Kováč, Co-Founder @ Ayanza: Teamwork At Its Best & in past also Co-Founder @ Exponea [More about Jozef here: https://www.crunchbase.com/person/jozo-kovac & https://www.linkedin.com/in/jozokovac/]

Radovan Kavický, President & Principal Data Scientist GapData Institute, Data Science Instructor @ DataCamp, Basecamp.ai & Skillmea + Founder of PyData Slovakia #PyDataBA #PyDataSK [More about Radovan/me here: https://www.datacamp.com/instructors/radovankavicky & https://www.linkedin.com/in/radovankavicky/]

Moderator:

Ondrej Macko, Member of @EISA Expert Group & Editor-in-Chief TOUCHIT - Digitálny svet na dotyk & former Editor-in-Chief @ PCRevue [More about Ondrej here: https://muckrack.com/ondrej-macko & https://rocketreach.co/ondrej-macko-email_39845305]

[20th of February, from 2:00pm CET, ONLINE/WebEx here, in Slovak + w/ translation to other languages: https://video.nti.sk/join/?hp3fr09cjhg

RSVP here: https://www.meetup.com/slovak-artificial-intelligence-meetup/events/291493399/]

On LinkedIn also here: https://www.linkedin.com/events/potrebujemegenerat-vnychchatbot7032283288777981952/comments/

Event also here (Facebook): https://www.facebook.com/events/httpsvideontiskjoinhp3fr09cjhg/aislovakiaaislovakia-radio-aislovakia-generat%C3%ADvne-chatboty-zak%C3%A1%C5%BEeme-ich-pom%C3%B4%C5%BEe-c/501471682145145/

In a separate post after the event I will go deeper into this topic, but IMHO it is important to at least make clear, before the discussion, what ChatGPT really is:

1. Generative AI (definition)

"Generative AI (GenAI) is the part of Artificial Intelligence that can generate all kinds of data, including audio, code, images, text, simulations, 3D objects, videos, and so forth. It takes inspiration from existing data, but also generates new and unexpected outputs, breaking new ground in the world of product design, art, and many more. Much of it, thanks to recent breakthroughs in the field like (Chat)GPT and Midjourney."

(via) https://generativeai.net/

2. ChatGPT (definition)

Within ChatGPT, the "Chat" part is self-explanatory, and GPT is short for "generative pre-trained transformer". "Generative" puts this AI into the category of models that generate new content (text output, in this case), and "pre-trained" means that it was built on top of a lot of data but is in fact static afterwards: it does not take in new information from the internet on the fly once it has been trained.
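To illustrate what "generative" and "pre-trained, then static" mean, here is a deliberately tiny stand-in: a word-level bigram model, which is NOT a transformer and not how ChatGPT works internally. The three-sentence corpus and the functions `pretrain` and `generate` are hypothetical, made up for this sketch. "Pre-training" here just counts which word follows which; after that the counts are frozen, and generation only reads them, one word at a time.

```python
from collections import Counter, defaultdict

def pretrain(corpus):
    """Toy 'pre-training' (hypothetical bigram model): count which word follows which.

    After this returns, the counts are frozen; generate() below only reads them.
    """
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = ["<s>"] + sentence.split() + ["</s>"]
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return dict(counts)

def generate(counts, prompt="", max_words=10):
    """Greedy, auto-regressive generation: each chosen word becomes the input for the next step."""
    output = prompt.split()
    word = output[-1] if output else "<s>"
    for _ in range(max_words):
        candidates = counts.get(word)  # read-only lookup, the model is never updated
        if not candidates:
            break  # unseen word: the frozen model has nothing to continue with
        word = candidates.most_common(1)[0][0]  # most frequent follower
        if word == "</s>":
            break
        output.append(word)
    return " ".join(output)

corpus = [
    "generative ai creates new text",
    "generative ai creates new images",
    "chatgpt creates new text",
]
model = pretrain(corpus)
print(generate(model))
print(generate(model, "chatgpt"))
```

A prompt word that never appeared in the training data, such as `generate(model, "bard")`, simply comes back unchanged: the frozen model cannot learn anything new from the prompt itself.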

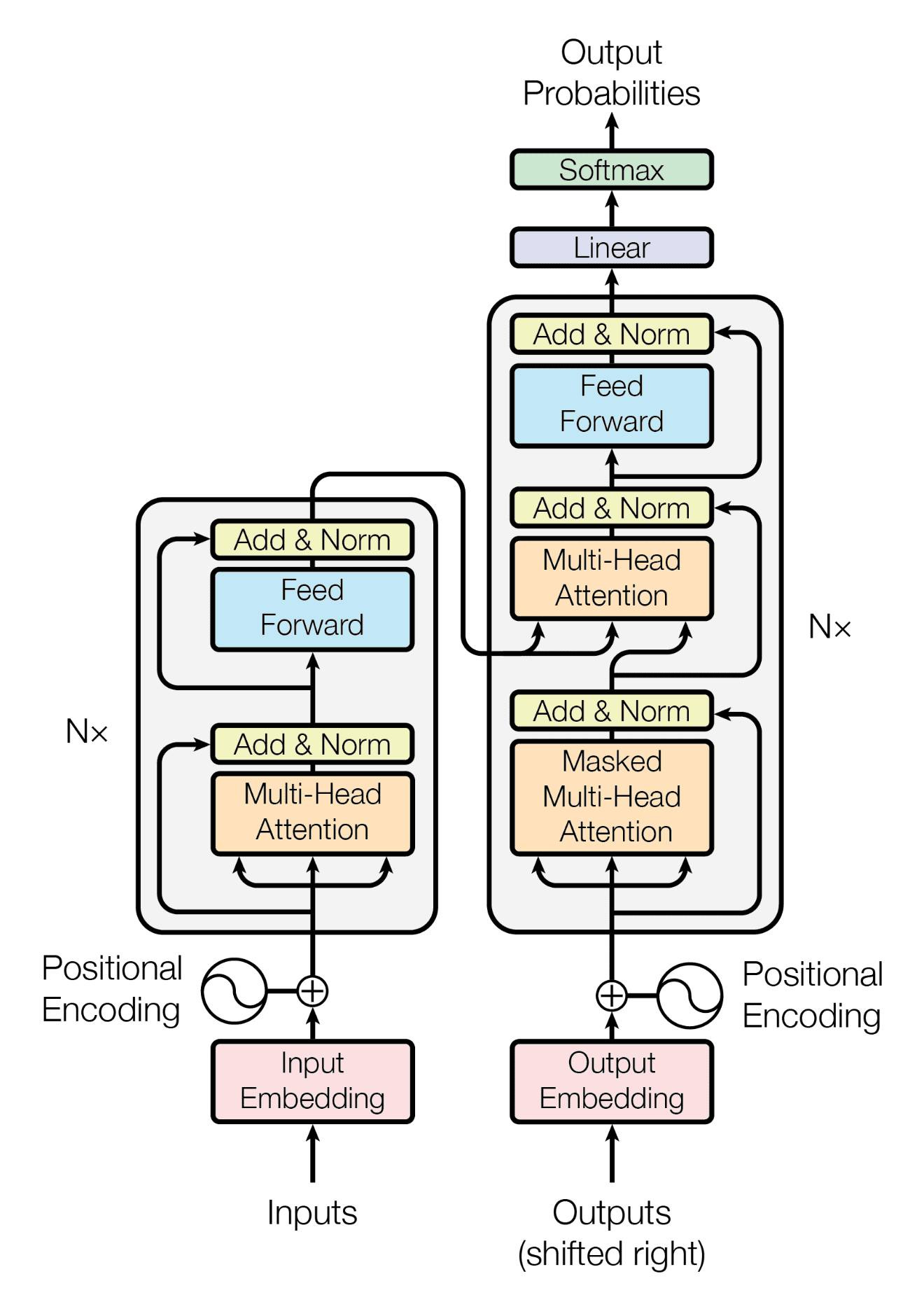

But the most important part of GPT is the Transformer, and it looks like this (from one of the most important papers, the one that "gave birth" to this whole new area of AI research that we now call Generative AI, or GenAI):

The Transformer (via) Attention Is All You Need (2017): https://arxiv.org/abs/1706.03762
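For readers who prefer formulas, the core building blocks in the figure can be written compactly. These are the equations as given in Attention Is All You Need: scaled dot-product attention, multi-head attention (with learned projection matrices per head), and the position-wise feed-forward network. The base model in the paper uses N = 6 layers, d_model = 512, h = 8 heads, d_k = d_v = 64 and d_ff = 2048.

```latex
\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\!\left(\frac{Q K^{\top}}{\sqrt{d_k}}\right) V

\mathrm{MultiHead}(Q, K, V) = \mathrm{Concat}(\mathrm{head}_1, \dots, \mathrm{head}_h)\, W^{O},
\qquad \mathrm{head}_i = \mathrm{Attention}\!\left(Q W_i^{Q},\; K W_i^{K},\; V W_i^{V}\right)

\mathrm{FFN}(x) = \max(0,\; x W_1 + b_1)\, W_2 + b_2
```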

3. What does it do? (You have all seen it in action, but what is happening behind it all?)

And what does it do? As the figure shows, it takes inputs (in this type of AI mostly text, i.e. the area of NLP, natural language processing), turns them into vectors and passes them through the encoder, a stack of 6 identical layers. Each encoder layer has two parts. The first is a multi-head self-attention mechanism: an attention function maps a query and a set of key-value pairs to an output, where the query, keys, values and the final output are all vectors (series of numbers), and self-attention is a way to learn a weighting function that lets the features decide about their importance themselves. The second part is a simple, position-wise feed-forward neural network.

The second half of the Transformer is the decoder, again a stack of 6 layers. The output of the encoder goes into each decoder layer as an additional input, and at the end a linear transformation followed by a softmax layer turns the decoder output into a probability for every possible next token (word or piece of a word) in the vocabulary the model learned during training.

That's it... what did you expect, something more? Seen from behind, the output to your prompts/inputs is simply a series of outputs with probabilities, and ChatGPT (the Transformer inside the chat) builds its answer from them, typically favouring the most probable tokens, although the next token can also be sampled from the distribution rather than always taking the top one. The whole model is also auto-regressive: it generates one token at a time, and each generated token is fed back as input when generating the next one. (GPT models themselves use only the decoder part of this architecture.)
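To make the two sub-layers described above more concrete, here is a minimal NumPy sketch of single-head scaled dot-product self-attention followed by a position-wise feed-forward network. It is an illustration under stated assumptions, not ChatGPT's implementation: the sizes, the random weights (a real model learns them during training) and the function names `self_attention` and `feed_forward` are made up for this example. The optional causal mask mimics how a decoder prevents each token from looking at tokens that come after it.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the row max for numerical stability; the probabilities are unchanged.
    z = x - np.max(x, axis=axis, keepdims=True)
    e = np.exp(z)
    return e / np.sum(e, axis=axis, keepdims=True)

def self_attention(x, w_q, w_k, w_v, causal=False):
    """Single-head scaled dot-product self-attention (illustrative sketch).

    x: (seq_len, d_model) input vectors, one row per token.
    w_q, w_k, w_v: (d_model, d_k) projection matrices (random here, learned in a real model).
    Returns the attended output (seq_len, d_k) and the attention weights (seq_len, seq_len).
    """
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])  # how well each query matches each key
    if causal:
        # Decoder-style mask: token i may only attend to tokens 0..i.
        mask = np.triu(np.ones_like(scores, dtype=bool), k=1)
        scores = np.where(mask, -np.inf, scores)
    weights = softmax(scores, axis=-1)  # each row sums to 1
    return weights @ v, weights

def feed_forward(x, w1, b1, w2, b2):
    # Position-wise FFN: the same two-layer ReLU network applied to every row (token).
    return np.maximum(0, x @ w1 + b1) @ w2 + b2

rng = np.random.default_rng(0)
seq_len, d_model, d_k, d_ff = 4, 8, 8, 32  # toy sizes, far smaller than in the paper
x = rng.normal(size=(seq_len, d_model))
w_q, w_k, w_v = (rng.normal(size=(d_model, d_k)) for _ in range(3))

out, weights = self_attention(x, w_q, w_k, w_v, causal=True)
ffn_out = feed_forward(
    out,
    rng.normal(size=(d_k, d_ff)), np.zeros(d_ff),
    rng.normal(size=(d_ff, d_model)), np.zeros(d_model),
)
print(out.shape, ffn_out.shape)
print(np.round(weights, 2))  # lower-triangular: no token looks into the future
```

A real Transformer additionally runs several such heads in parallel, adds residual connections and layer normalisation, and stacks the result 6 times; those parts are left out here to keep the core idea visible.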

4. Dunning-Kruger & ChatGPT (it's funny, because it's true)

Another way of explaining ChatGPT's inner workings is through the lens of the Dunning-Kruger effect (true story, funny because it is true: the more you learn about ChatGPT, the more useful it becomes, because you learn how to use it correctly, as the tool it was designed to be).

Picture/Figure (via) https://www.instagram.com/data_science_school/

Simply said... ChatGPT is a language model, and not just any language model but, by definition, an LLM (large language model): basically a deep learning algorithm that can summarize, recognize, translate, predict and generate text and other content based on knowledge gained from massive datasets (in this case, a lot of text...).

Large language models are among the most successful applications of transformer models.

In addition to accelerating natural language processing applications, like translation, chatbots and AI assistants, large language models are used in healthcare, software development and many other fields.
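The "predict and generate" step of an LLM ends with turning raw scores (logits) into probabilities with a softmax and then picking the next token. Text generators commonly either take the single most probable token (greedy decoding) or sample from the distribution, often with a temperature parameter; which strategy and settings ChatGPT itself uses is not stated in this post. The sketch below compares the two, assuming a hypothetical four-word vocabulary and made-up logits.

```python
import numpy as np

def softmax(logits, temperature=1.0):
    # Divide the scores by the temperature, then normalise them into probabilities.
    z = np.asarray(logits, dtype=float) / temperature
    z -= z.max()  # numerical stability
    e = np.exp(z)
    return e / e.sum()

# Hypothetical next-token scores a model might produce after a prompt like "AI is a ..."
vocab = ["tool", "threat", "hype", "friend"]
logits = [2.0, 1.0, 0.5, 0.1]

greedy = vocab[int(np.argmax(logits))]  # always the single most likely token
print("greedy:", greedy)

rng = np.random.default_rng(42)
for t in (0.2, 1.0, 2.0):
    probs = softmax(logits, temperature=t)
    sample = vocab[rng.choice(len(vocab), p=probs)]  # random draw weighted by probs
    print(f"T={t}: probs={np.round(probs, 3)}, sampled={sample}")
```

A low temperature sharpens the distribution towards greedy behaviour (more predictable answers), while a high temperature flattens it (more varied, but also more surprising, answers).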

And this is how it works:

Figure (via) https://jalammar.github.io/illustrated-transformer/

Figure (via) https://jalammar.github.io/illustrated-transformer/

In upcoming posts, and also during tomorrow's event (stay tuned, more to come), you will also learn:

Why I (and not only I) think that ChatGPT is not really innovative

[in fact, we have had these technologies for years and were expecting the next AI winter soon; with ChatGPT & Google Bard on the way... it all changed, and what to do now]

What is coming next, what will be the "next big thing" in #GenAI / #GenerativeAI, and what changes it will bring to global society

That's all folks... this might help as a first teaser: